
import numpy as np

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import LSTM, Flatten, Dense, Dropout

import matplotlib.pyplot as plt

# raw 데이터 생성

x_data = np.arange(0, 365, 0.1)

y_data = 2 * np.sin(1.2 * x_data) - np.cos(x_data / 1.5) + 1 # np.array(3650,)

y_data = y_data.reshape(-1, 1) # np.array(3650,) -> np.array(3650, 1)

## y_data 확인

# plt.grid()

# plt.plot(y_data)

# plt.show()

# 데이터셋 생성

window_size = 30

horizon_factor = 1

def makeDataset(y_data, window_size, horizon_factor):

temp_x = []

temp_y = []

for i in range(len(y_data) - (window_size + horizon_factor) + 1):

x = y_data[i:(i + window_size)]

y = (y_data[i + window_size + horizon_factor - 1])

temp_x.append(x)

temp_y.append(y)

return np.array(temp_x), np.array(temp_y) # (4, 5) -> (1, 4, 5)

x_train, y_train = makeDataset(y_data, window_size, horizon_factor) # shape : (3620, 30, 1), (3620, 1)

split_ratio = 0.75

train_num = int(len(x_train) * split_ratio)

x_val = x_train[train_num:]

y_val = y_train[train_num:]

x_train = x_train[0:train_num]

y_train = y_train[0:train_num]

# 모델 생성

def make_model():

model = Sequential()

model.add(LSTM(units=32, return_sequences=True, input_shape=(30, 1)))

model.add(LSTM(units=32, recurrent_dropout=0.2))

model.add(Dense(8, activation='relu'))

model.add(Dense(1))

return model

model = make_model()

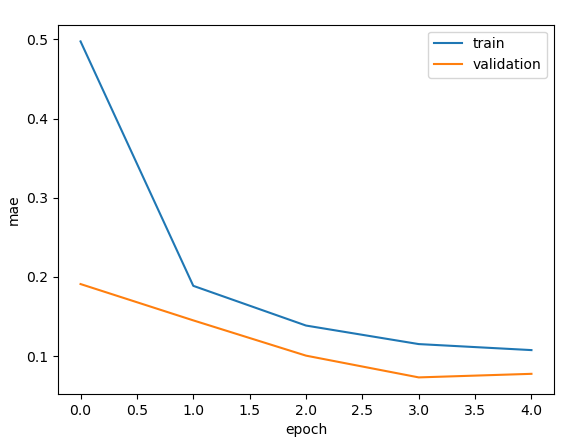

model.compile(loss='mse', optimizer=tf.keras.optimizers.RMSprop(), metrics=['mae'])

history = model.fit(x_train, y_train, epochs=5, validation_data=(x_val, y_val))

# 훈련의 정확도 확인

plt.plot(history.history['mae'])

plt.plot(history.history['val_mae'])

plt.xlabel('epoch')

plt.ylabel('mae')

plt.legend(['train', 'validation'], loc='best')

plt.show()

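The loop in `makeDataset` can also be written without a Python loop. The sketch below is an alternative and is not the author's code. It assumes NumPy 1.20 or later for `numpy.lib.stride_tricks.sliding_window_view`. The helper name `make_dataset_vectorized` is hypothetical. The sketch produces the same `(samples, window_size, 1)` inputs and `(samples, 1)` targets, and it also shows the sample counts that the 75/25 chronological split yields.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def make_dataset_vectorized(y_data, window_size, horizon_factor):
    """Hypothetical vectorized equivalent of makeDataset for y_data of shape (N, 1)."""
    num_samples = len(y_data) - (window_size + horizon_factor) + 1
    # Windowing along axis 0 gives shape (N - window_size + 1, 1, window_size)
    windows = sliding_window_view(y_data, window_size, axis=0)[:num_samples]
    x = windows.transpose(0, 2, 1)  # -> (num_samples, window_size, 1)
    # The target for window i is y_data[i + window_size + horizon_factor - 1]
    start = window_size + horizon_factor - 1
    y = y_data[start:start + num_samples]  # -> (num_samples, 1)
    return x, y

x_data = np.arange(0, 365, 0.1)
y_data = (2 * np.sin(1.2 * x_data) - np.cos(x_data / 1.5) + 1).reshape(-1, 1)

x_all, y_all = make_dataset_vectorized(y_data, window_size=30, horizon_factor=1)
train_num = int(len(x_all) * 0.75)
print(x_all.shape, y_all.shape)           # (3620, 30, 1) (3620, 1)
print(train_num, len(x_all) - train_num)  # 2715 905
```

`x` is a strided view of `y_data` rather than a copy. If a contiguous array is needed, wrap it in `np.ascontiguousarray`.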

Bidirectional LSTM
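Conceptually, `Bidirectional` runs one copy of the wrapped layer over the sequence as given. It runs a second copy, with its own weights, over the reversed sequence, and then merges the two outputs. Keras concatenates them by default (`merge_mode='concat'`). The NumPy sketch below illustrates the idea using a plain tanh RNN instead of an LSTM cell, to keep it short. The function names and random weights are illustrative assumptions, not Keras internals.

```python
import numpy as np

def simple_rnn(x, W, U, b):
    """Run a plain tanh RNN over x of shape (timesteps, features); return the last hidden state."""
    h = np.zeros(U.shape[0])
    for x_t in x:
        h = np.tanh(W @ x_t + U @ h + b)
    return h

def bidirectional_last_state(x, fwd_params, bwd_params):
    """Concatenate the final state of a forward pass with that of a pass over the reversed sequence."""
    h_fwd = simple_rnn(x, *fwd_params)
    h_bwd = simple_rnn(x[::-1], *bwd_params)  # separate weights for the backward direction
    return np.concatenate([h_fwd, h_bwd])

rng = np.random.default_rng(0)
units, features, timesteps = 32, 1, 30

def init_params():
    # W: input-to-hidden, U: hidden-to-hidden, b: bias (small random values for illustration)
    return (rng.normal(scale=0.1, size=(units, features)),
            rng.normal(scale=0.1, size=(units, units)),
            np.zeros(units))

x = rng.normal(size=(timesteps, features))
out = bidirectional_last_state(x, init_params(), init_params())
print(out.shape)  # (64,)
```

The concatenation is why each `Bidirectional(LSTM(units=32))` layer in this section outputs 64 features rather than 32.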


import numpy as np

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import LSTM, Bidirectional, Flatten, Dense, Dropout

import matplotlib.pyplot as plt

# raw 데이터 생성

x_data = np.arange(0, 365, 0.1)

y_data = 2 * np.sin(1.2 * x_data) - np.cos(x_data / 1.5) + 1 # np.array(3650,)

y_data = y_data.reshape(-1, 1) # np.array(3650,) -> np.array(3650, 1)

## y_data 확인

# plt.grid()

# plt.plot(y_data)

# plt.show()

# 데이터셋 생성

window_size = 30

horizon_factor = 1

def makeDataset(y_data, window_size, horizon_factor):

temp_x = []

temp_y = []

for i in range(len(y_data) - (window_size + horizon_factor) + 1):

x = y_data[i:(i + window_size)]

y = (y_data[i + window_size + horizon_factor - 1])

temp_x.append(x)

temp_y.append(y)

return np.array(temp_x), np.array(temp_y) # (4, 5) -> (1, 4, 5)

x_train, y_train = makeDataset(y_data, window_size, horizon_factor) # shape : (3620, 30, 1), (3620, 1)

split_ratio = 0.75

train_num = int(len(x_train) * split_ratio)

x_val = x_train[train_num:]

y_val = y_train[train_num:]

x_train = x_train[0:train_num]

y_train = y_train[0:train_num]

# 모델 생성

def make_model():

model = Sequential()

model.add(Bidirectional(LSTM(units=32, return_sequences=True, input_shape=(30, 1))))

model.add(Bidirectional(LSTM(units=32, recurrent_dropout=0.2)))

model.add(Dense(8, activation='relu'))

model.add(Dense(1))

return model

model = make_model()

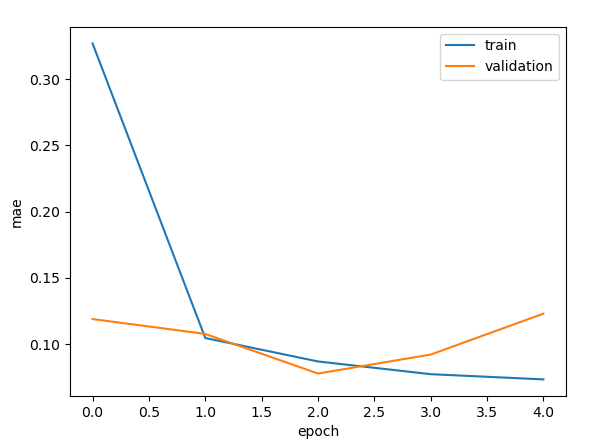

model.compile(loss='mse', optimizer=tf.keras.optimizers.RMSprop(), metrics=['mae'])

history = model.fit(x_train, y_train, epochs=5, validation_data=(x_val, y_val))

# 훈련의 정확도 확인

plt.plot(history.history['mae'])

plt.plot(history.history['val_mae'])

plt.xlabel('epoch')

plt.ylabel('mae')

plt.legend(['train', 'validation'], loc='best')

plt.show()


GRU
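A GRU keeps a single hidden state and uses two gates: an update gate `z` and a reset gate `r`. An LSTM, by contrast, has input, forget and output gates plus a separate cell state. The NumPy sketch below shows one way to compute a GRU step. It uses the formulation where the reset gate is applied before the recurrent matrix product. Keras's default in TF 2.x is `reset_after=True`, which applies it after the product, so this is an illustration rather than an exact replica of the Keras layer. The final interpolation follows the Keras convention, `h = z * h_prev + (1 - z) * h_hat`. The function names and random weights are hypothetical.

```python
import numpy as np

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

def gru_step(x_t, h_prev, params):
    """One GRU time step (reset gate applied before the recurrent matmul)."""
    Wz, Uz, bz, Wr, Ur, br, Wh, Uh, bh = params
    z = sigmoid(Wz @ x_t + Uz @ h_prev + bz)            # update gate
    r = sigmoid(Wr @ x_t + Ur @ h_prev + br)            # reset gate
    h_hat = np.tanh(Wh @ x_t + Uh @ (r * h_prev) + bh)  # candidate state
    # z weights the previous state, (1 - z) weights the candidate
    return z * h_prev + (1.0 - z) * h_hat

def gru_forward(x, params, units):
    """Run the GRU over x of shape (timesteps, features); return the last hidden state."""
    h = np.zeros(units)
    for x_t in x:
        h = gru_step(x_t, h, params)
    return h

rng = np.random.default_rng(0)
units, features, timesteps = 32, 1, 30
params = []
for _ in range(3):  # weights for z, r and the candidate state
    params += [rng.normal(scale=0.1, size=(units, features)),
               rng.normal(scale=0.1, size=(units, units)),
               np.zeros(units)]

x = rng.normal(size=(timesteps, features))
h_last = gru_forward(x, params, units)
print(h_last.shape)  # (32,)
```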


```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import GRU, Dense
import matplotlib.pyplot as plt

# Generate raw data
x_data = np.arange(0, 365, 0.1)
y_data = 2 * np.sin(1.2 * x_data) - np.cos(x_data / 1.5) + 1  # shape: (3650,)
y_data = y_data.reshape(-1, 1)  # (3650,) -> (3650, 1)

## Inspect y_data
# plt.grid()
# plt.plot(y_data)
# plt.show()

# Build the windowed dataset
window_size = 30     # number of past time steps fed to the model
horizon_factor = 1   # predict the value this many steps after the end of the window

def makeDataset(y_data, window_size, horizon_factor):
    temp_x = []
    temp_y = []
    for i in range(len(y_data) - (window_size + horizon_factor) + 1):
        x = y_data[i:(i + window_size)]                   # (window_size, 1)
        y = y_data[i + window_size + horizon_factor - 1]  # (1,)
        temp_x.append(x)
        temp_y.append(y)
    # list of (window_size, 1) arrays -> (num_samples, window_size, 1)
    return np.array(temp_x), np.array(temp_y)

x_train, y_train = makeDataset(y_data, window_size, horizon_factor)  # shapes: (3620, 30, 1), (3620, 1)

# Chronological split: first 75% for training, last 25% for validation
split_ratio = 0.75
train_num = int(len(x_train) * split_ratio)

x_val = x_train[train_num:]
y_val = y_train[train_num:]
x_train = x_train[0:train_num]
y_train = y_train[0:train_num]

# Build the model (same structure as the LSTM version, with GRU layers)
def make_model():
    model = Sequential()
    model.add(GRU(units=32, return_sequences=True, input_shape=(window_size, 1)))
    # recurrent_dropout > 0 prevents Keras from using the fused cuDNN kernel on GPU (slower training)
    model.add(GRU(units=32, recurrent_dropout=0.2))
    model.add(Dense(8, activation='relu'))
    model.add(Dense(1))
    return model

model = make_model()

model.compile(loss='mse', optimizer=tf.keras.optimizers.RMSprop(), metrics=['mae'])
history = model.fit(x_train, y_train, epochs=5, validation_data=(x_val, y_val))

# Plot training vs. validation MAE (a regression task has no accuracy metric)
plt.plot(history.history['mae'])
plt.plot(history.history['val_mae'])
plt.xlabel('epoch')
plt.ylabel('mae')
plt.legend(['train', 'validation'], loc='best')
plt.show()
```

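One concrete way to compare the three models in this post is by trainable parameter count. The sketch below computes the counts from the standard Keras weight shapes. It assumes TF 2.x defaults: `use_bias=True`, and `reset_after=True` for `GRU`, which gives the GRU two bias vectors per gate. The helper names are hypothetical. If these assumptions hold, `model.summary()` should report the same totals.

```python
def lstm_params(input_dim, units):
    # kernel (input_dim, 4u) + recurrent kernel (u, 4u) + bias (4u)
    return 4 * units * (input_dim + units + 1)

def gru_params(input_dim, units):
    # reset_after=True: kernel (input_dim, 3u) + recurrent kernel (u, 3u) + bias (2, 3u)
    return 3 * units * (input_dim + units + 2)

def dense_params(input_dim, units):
    return input_dim * units + units

def stacked_rnn_total(rnn_params, units=32, input_dim=1, bidirectional=False):
    """Total for: RNN(units, return_sequences=True) -> RNN(units) -> Dense(8) -> Dense(1)."""
    factor = 2 if bidirectional else 1  # Bidirectional holds a forward and a backward copy
    out_dim = factor * units            # merge_mode='concat' doubles the output features
    total = factor * rnn_params(input_dim, units)
    total += factor * rnn_params(out_dim, units)
    total += dense_params(out_dim, 8) + dense_params(8, 1)
    return total

print(stacked_rnn_total(lstm_params))                      # 12945
print(stacked_rnn_total(lstm_params, bidirectional=True))  # 34065
print(stacked_rnn_total(gru_params))                       # 9969
```

With the same layer sizes, the GRU model is the smallest of the three. The bidirectional model is the largest, because it doubles both the recurrent weights and the input width of every layer after the first.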
